The FCE™ Framework: A Fast, Focused Way to Measure AI Value

Learn how to measure AI ROI fast with the FCE™ Framework: track impact using three simple scores and make better decisions.

Organisations increasingly turn to artificial intelligence (AI) to drive growth, efficiency, and innovation. Yet without a clear way to measure the impact of AI investments, projects can stall, budgets can balloon, and stakeholders can lose confidence. To address this in a way that is simple and understandable for everyone, I propose the FCE™ scorecard framework.

The FCE™ AI valuation framework focuses on three pillars:

Financial Return

Customer Experience

Employee Enablement

With just one metric per pillar and a single 0-100 total score, FCE delivers clarity, speed, and comprehensive coverage.

Why FCE?

Simplicity: Three pillars, one number each. No complex KPIs or debates.

Comprehensiveness: Covers money, market-facing outcomes, and internal productivity.

Speed: Fill in three values; get a clear score and recommendation.

Actionable: Decision bands tell you whether to scale, pause, or improve.

Pillars, Metrics & Weights

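Financial Return (F): annual ROI%, up to 50 points.

Customer Experience (C): NPS change, up to 25 points.

Employee Enablement (E): hours saved per employee per month, up to 25 points.

Total = sum of all three scores (max 100).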

Risk Penalty (optional): −10 if a material AI incident occurred in the last 12 months.

Gate Check: No factor may score below 40% of its max (i.e., F ≥20; C ≥10; E ≥10). If any fail, pause and remediate before scaling.

How It Works: Step‑by‑Step

Baseline Measurement: Record pre-AI values for each metric.

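Deploy & Measure: After deployment, on a monthly or quarterly cadence, capture the current value of each metric: annual ROI%, NPS, and hours saved per employee per month.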

Score Calculation: Map each value to its point threshold.

Total & Adjust: Sum the three scores; subtract 10 points if risk applies.

Gate Check: Ensure each factor ≥40% of its max before scaling.

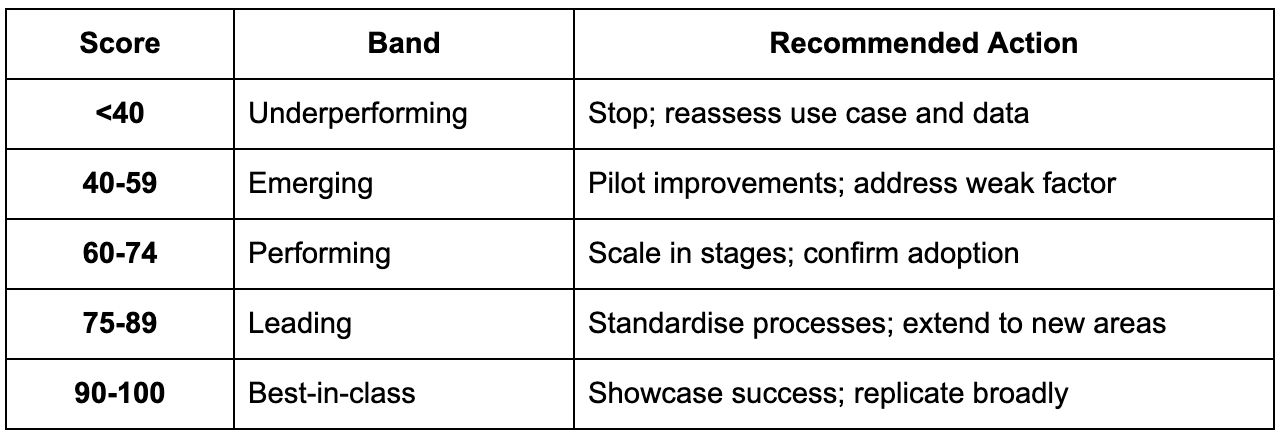

Decision Band: Use the adjusted total to determine the action (scale, pause, or improve).

Pillar Deep‑Dive & Examples

1. Financial Return (50 points)

This pillar measures AI’s direct economic impact in black-and-white terms by focusing solely on annual ROI%: the ratio of net benefits (cost savings plus revenue uplift, minus costs) to costs. It shows how much net value the initiative generates per dollar spent. The formula is:

ROI% = ((Annual Benefits − Annual Costs) ÷ Annual Costs) × 100

Scoring mapping: ≤0%→0; 1-25%→15; 26-50%→25; 51-100%→35; >100%→50.

A positive ROI means the AI pays back more than it cost, and the higher the ROI, the faster it builds value. Because every initiative is scored on the same scale, stakeholders can compare alternatives directly.

Example calculation: Suppose a predictive maintenance AI system reduces downtime and saves $300,000 annually in avoided production losses, while the total annualized cost (model development amortized, cloud compute, integration, and monitoring) is $120,000. The ROI% is ((300,000 − 120,000)/120,000) × 100 = (180,000/120,000) × 100 = 150%, which maps to 50 points (the maximum) because it exceeds 100%.
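The ROI formula and scoring bands can be sketched in a few lines of Python. This is a minimal illustration rather than an official FCE™ tool: the function names are hypothetical, and the handling of fractional values that fall between the published bands (for example 25.5%) is an assumption, here resolved by treating each band's upper bound as inclusive.

```python
def roi_percent(annual_benefits: float, annual_costs: float) -> float:
    """ROI% = ((Annual Benefits - Annual Costs) / Annual Costs) * 100."""
    if annual_costs <= 0:
        raise ValueError("annual_costs must be positive")
    return (annual_benefits - annual_costs) / annual_costs * 100


def financial_score(roi: float) -> int:
    """Map ROI% to Financial Return points (max 50).

    Assumption: values between published bands fall into the band
    whose upper bound they do not exceed (e.g. 25.5% scores 25 points).
    """
    if roi <= 0:
        return 0
    if roi <= 25:
        return 15
    if roi <= 50:
        return 25
    if roi <= 100:
        return 35
    return 50


# Predictive maintenance example: $300,000 benefits, $120,000 costs.
roi = roi_percent(300_000, 120_000)
print(roi, financial_score(roi))  # 150.0 50
```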

2. Customer Experience (25 points)

This pillar captures AI’s impact on customer loyalty by measuring NPS change. NPS is a widely used loyalty indicator that is often associated with revenue growth and retention. The calculation is:

NPS change = Post-AI NPS − Baseline NPS

Scoring mapping: ≤0→0; 1-5→8; 6-10→15; 11-20→21; >20→25. A positive shift shows that customers are more likely to promote the brand, meaning the AI is improving their experience in a way that drives downstream value.

Example calculation: A retail AI personalization engine increases the customer NPS from 32 to 44 (a change of +12). That falls into the 11-20 bucket, which yields 21 points toward the total score.
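As a rough sketch, the NPS-change mapping looks like this in Python. The function name is hypothetical, and treating each band's upper bound as inclusive for fractional changes is an assumption, since the published bands list whole numbers only.

```python
def customer_score(baseline_nps: float, post_ai_nps: float) -> int:
    """Map NPS change (post-AI minus baseline) to Customer Experience points (max 25)."""
    change = post_ai_nps - baseline_nps
    if change <= 0:
        return 0
    if change <= 5:
        return 8
    if change <= 10:
        return 15
    if change <= 20:
        return 21
    return 25


# Retail personalization example: NPS moves from 32 to 44 (+12).
print(customer_score(32, 44))  # 21
```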

Edge case: Internal-only AI tools. The standard Customer Experience pillar assumes an external customer base, so traditional NPS does not apply directly to internal-only tools. Instead, you can use Internal NPS (iNPS): ask internal users “How likely are you to recommend this tool to a colleague?” on the 0-10 scale, compute iNPS as the percentage of promoters (scores of 9-10) minus the percentage of detractors (scores of 0-6), then take the change versus baseline. Score the delta using the same thresholds as external NPS.
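For teams collecting iNPS survey responses themselves, the calculation could look like the sketch below. It uses the standard NPS convention (promoters score 9-10, detractors score 0-6); the function name and the survey responses are made up for illustration.

```python
from typing import Sequence


def nps(responses: Sequence[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not responses:
        raise ValueError("at least one response is required")
    if any(r < 0 or r > 10 for r in responses):
        raise ValueError("responses must be on the 0-10 scale")
    promoters = sum(1 for r in responses if r >= 9)
    detractors = sum(1 for r in responses if r <= 6)
    return 100 * (promoters - detractors) / len(responses)


# Hypothetical survey data, before and after the internal tool rollout.
baseline = [6, 7, 8, 9, 5, 10, 7, 3, 9, 8]
post_ai = [9, 8, 9, 10, 7, 10, 7, 6, 9, 8]

inps_change = nps(post_ai) - nps(baseline)
print(nps(baseline), nps(post_ai), inps_change)  # 0.0 40.0 40.0
```

The resulting change is then scored with the same thresholds as external NPS.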

3. Employee Enablement (25 points)

This pillar quantifies AI’s productivity boost for staff using a single, observable metric: hours saved per employee per month. Conceptually, the formula is:

Hours saved = ((Pre-AI minutes per task − Post-AI minutes per task) × tasks per month) ÷ 60, averaged across employees. Dividing by 60 converts minutes to hours.

Scoring mapping: 0→0; 1-2→6; 3-5→12; 6-10→19; >10→25. This metric makes internal efficiency tangible and supports adoption, because employees can see the time they get back.

Example calculation: An AI assistant reduces average research time from 20 minutes to 12 minutes per request. If a typical employee makes 25 such requests per month, the time saved per employee is ((20 − 12) × 25)/60 = (8 × 25)/60 = 200/60 ≈ 3.33 hours. That fits into the 3-5 bucket, yielding 12 points toward the score.
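The same calculation can be expressed as a short Python sketch. The function names are hypothetical, and the treatment of fractional hours between the published bands (for example 2.5 hours) is an assumption: each band's upper bound is taken as inclusive.

```python
def hours_saved_per_month(pre_minutes: float, post_minutes: float,
                          tasks_per_month: float) -> float:
    """Hours saved per employee per month; dividing by 60 converts minutes to hours."""
    return (pre_minutes - post_minutes) * tasks_per_month / 60


def enablement_score(hours: float) -> int:
    """Map hours saved per employee per month to Employee Enablement points (max 25)."""
    if hours <= 0:
        return 0
    if hours <= 2:
        return 6
    if hours <= 5:
        return 12
    if hours <= 10:
        return 19
    return 25


# Research assistant example: 20 -> 12 minutes per request, 25 requests per month.
hours = hours_saved_per_month(20, 12, 25)
print(round(hours, 2), enablement_score(hours))  # 3.33 12
```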

Edge case: Innovative capabilities with no immediate time savings. Some AI initiatives create entirely new capability and do not yet yield measurable hours saved. To avoid treating these as zero value, the framework allows a temporary Innovation Mode adjustment within this pillar. When hours saved is zero, projects can earn up to 15 provisional points based on a simple rubric:

Prototype validated and internal stakeholder buy-in: 5 points

Pilot integrated into workflows or active internal testing: 5 points

Strategic alignment with a documented roadmap to future enablement (e.g., planned automation that will produce hours saved): 5 points

This gives early credit while keeping the eventual target (real hours saved) in view. Once the tool starts delivering measurable time savings, the provisional score is retired and replaced with the standard hours-saved score.
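One way to encode the Innovation Mode rule is sketched below. The function and parameter names are hypothetical; the logic simply awards 5 provisional points per rubric criterion met while hours saved is zero, and falls back to the standard hours-saved score as soon as measurable savings appear.

```python
def employee_points(hours_saved: float, standard_points: int,
                    prototype_validated: bool = False,
                    pilot_active: bool = False,
                    roadmap_documented: bool = False) -> int:
    """Employee Enablement points, with Innovation Mode when no hours are saved yet."""
    if hours_saved > 0:
        # Measurable savings exist: the provisional score is retired.
        return standard_points
    # 5 provisional points per criterion met, up to 15.
    return 5 * sum((prototype_validated, pilot_active, roadmap_documented))


# New capability with a validated prototype and an active pilot, no roadmap yet.
print(employee_points(0, 0, prototype_validated=True, pilot_active=True))  # 10
```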

Risk Penalty & Gate Check

Risk Penalty (−10): Applies if a material incident (e.g., compliance breach, bias event) occurred within the last 12 months.

Gate Check: Each pillar must hit 40% of its max (F ≥20; C ≥10; E ≥10). If any fail, remediate that area before further investment.
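Putting the total, the risk penalty, and the gate check together might look like the following sketch. The names `FceResult` and `fce_total` are hypothetical, and the example reuses the pillar scores from the worked examples (50, 21, 12) purely as illustrative inputs. The framework does not say whether the total should be floored at zero after the penalty, so this sketch leaves it unclamped.

```python
from dataclasses import dataclass

MAX_POINTS = {"Financial Return": 50, "Customer Experience": 25, "Employee Enablement": 25}
# Gate minimums as stated in the framework: 40% of each pillar's max.
GATE_MIN = {"Financial Return": 20, "Customer Experience": 10, "Employee Enablement": 10}
RISK_PENALTY = 10


@dataclass
class FceResult:
    total: int
    gate_passed: bool
    failed_pillars: list[str]


def fce_total(f: int, c: int, e: int, material_incident: bool = False) -> FceResult:
    """Sum the pillar scores, apply the optional risk penalty, and run the gate check."""
    scores = {"Financial Return": f, "Customer Experience": c, "Employee Enablement": e}
    for pillar, points in scores.items():
        if not 0 <= points <= MAX_POINTS[pillar]:
            raise ValueError(f"{pillar} score must be between 0 and {MAX_POINTS[pillar]}")
    total = sum(scores.values()) - (RISK_PENALTY if material_incident else 0)
    failed = [p for p, points in scores.items() if points < GATE_MIN[p]]
    return FceResult(total=total, gate_passed=not failed, failed_pillars=failed)


result = fce_total(50, 21, 12)
print(result.total, result.gate_passed)  # 83 True
```

A pillar listed in `failed_pillars` is the area to remediate before further investment.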

Decision Bands & Actions

Benefits of FCE™

Rapid alignment around three core metrics.

Unambiguous scoring with fixed thresholds cuts down on ROI debates.

Balanced perspective across financial, customer, and employee impacts.

Minimal overhead: few inputs, instant scoring.

Action‑oriented: clear gate and bands guide next steps.

Get Started Today

Download The FCE™ Framework Toolkit

Final Words

The FCE™ framework is designed to strip away complexity while preserving the essential dimensions of AI value: financial payoff, customer loyalty, and employee productivity. By using just three clear metrics and fixed thresholds, it makes assessment immediate and comparable across initiatives, enabling better prioritization and faster scaling. Its built-in gate and decision bands guard against over-investing in one area while neglecting others, and the optional risk penalty encourages responsible deployment. Start small, measure consistently, and let the FCE™ score drive disciplined, high-impact AI adoption.