Reverse Prompt Engineering Framework (RPEF) - Deliver Consistent Results with LLMs

Many LLM prompts that work once fall apart when reused at scale. Discover Reverse Prompt Engineering and turn great outputs into consistent, reusable workflows.

TL;DR

Getting one good answer from an LLM is easy. Getting consistently good answers is hard. Reverse Prompt Engineering is a practical way to turn successful outputs into reusable systems, letting LLMs handle structure and repetition while humans focus on judgment and context.

Why Consistency With LLMs Is Still Hard

Many professionals now use tools like ChatGPT, Gemini, or Claude on a daily basis. The real challenge is no longer adoption; it is reliability.

Most teams have experienced the same pattern. An LLM produces a great result once, but the next attempt requires rewrites, prompt tweaking, and manual correction. Over time, the effort shifts from doing the work to managing the prompts.

High-quality output still depends heavily on human input. Refinement, iteration, and careful phrasing remain essential. This is where many people feel stuck. They do not want to become prompt engineers, yet they need consistent results.

Hands-on testing in real workflows points to a more effective way to approach this problem.

Instead of starting with prompts, start with outcomes.

That shift in thinking is the foundation of the Reverse Prompt Engineering Framework (RPEF).

What Is Reverse Prompt Engineering?

Reverse Prompt Engineering is a method that works backwards from proven results.

Rather than asking how to write the perfect prompt, it asks a different question:

How can an LLM recreate outputs like this again when given similar inputs?

Given real inputs and the real outputs produced from them, the LLM can infer structure, expectations, and decision logic on its own. The result is a reusable meta-prompt that captures how the task should be done.

This turns one successful example into a repeatable system.

The Reverse Prompt Engineering Framework (RPEF)

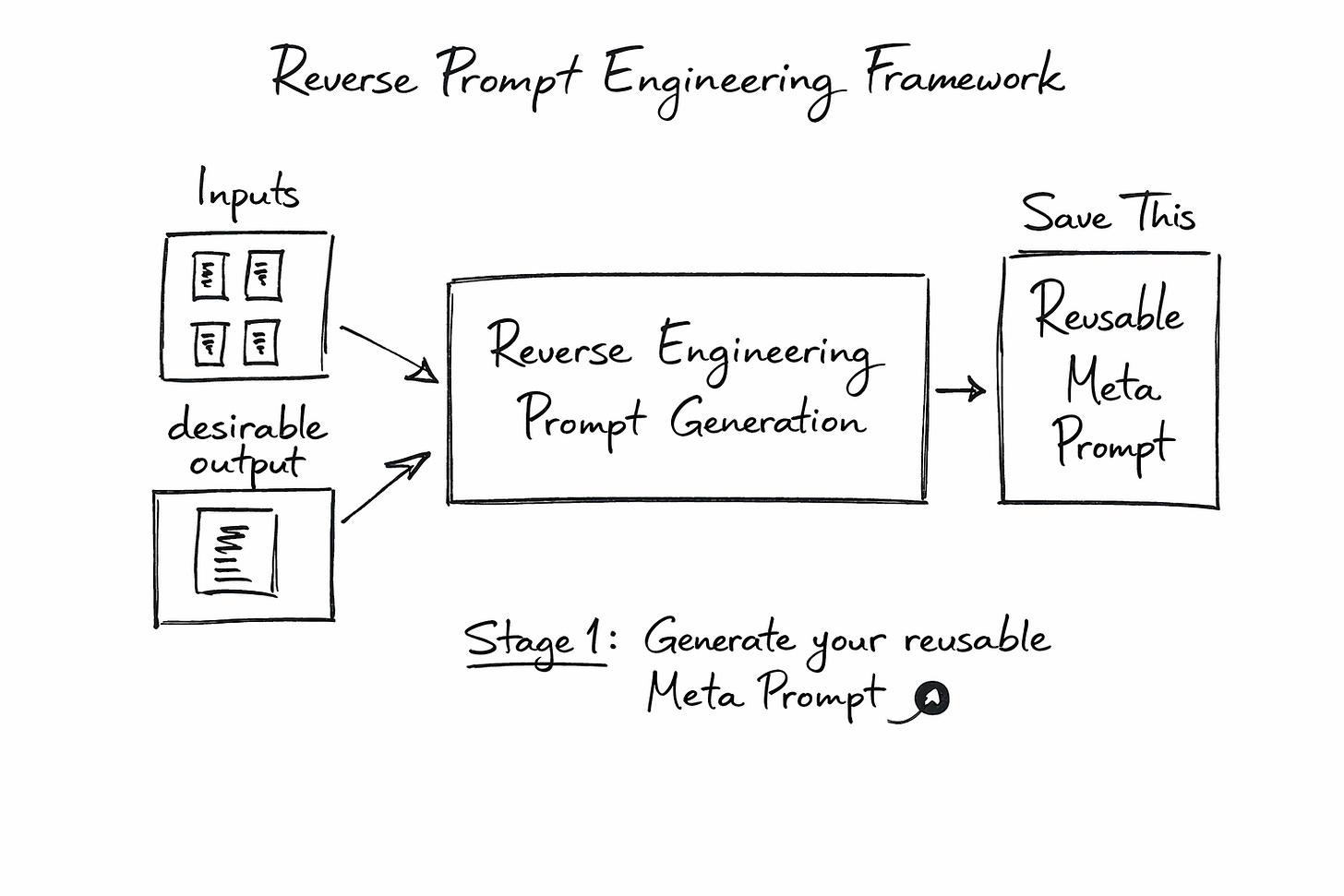

The framework consists of two clear stages. Together, they transform one-off success into consistent performance.

Stage One: Generate a Reusable Meta-Prompt

Every knowledge task follows the same basic flow. You start with inputs, you perform some form of reasoning or synthesis, and you produce an output you are confident sharing.

Stage One assumes you already have a strong example of completed work.

You take the original inputs and the final output and ask the LLM to reverse-engineer the prompt that could reliably produce similar results. The LLM analyses what went in, what came out, and how decisions were implicitly made.

The result is a reusable meta-prompt.

A meta-prompt is not content. It is instruction. It encodes structure, tone, constraints, quality thresholds, and decision rules that would otherwise live only in a human’s head.

Use the following prompt to generate the meta-prompt:

You are an expert Prompt Engineer.

Your task is to **reverse-engineer a reusable prompt** using:

- Raw input data (provided below)

- A desired output (also provided below)

---

## Instructions

1. Analyze the relationship between the input and the output.

2. Infer tone, structure, logic, and formatting patterns.

3. Identify the essential information needed to recreate the output.

4. Ask clarifying questions if any data is missing or unclear.

5. Proceed only when you are **90% confident** in your understanding.

6. Write a **copy-paste-ready prompt** that can generate similar output from similar input.

---

## Provided by the User

**INPUT DATA (raw):**

{Paste raw input here}

---

**OUTPUT (desired result):**

{Paste example output here}

---

## Your Response

Return a reusable prompt that:

- Replicates the tone, style, and structure of the output.

- Works with new, similar inputs.

- Includes placeholders for user input.

Once created, this meta-prompt becomes an asset. It can be reused whenever the same type of task appears again.
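If you run Stage One often, pasting the input and output into the template by hand gets tedious. Below is a minimal Python sketch, assuming you keep the prompt above as a text template containing its two literal slots, `{Paste raw input here}` and `{Paste example output here}`. The helper name `build_stage_one_prompt` and the sample strings are hypothetical. The helper only builds the prompt text; sending it to a model is left out, since any chat interface or API would do.

```python
import re

# The two literal slots from the Stage One prompt template above.
RAW_SLOT = "{Paste raw input here}"
OUTPUT_SLOT = "{Paste example output here}"


def build_stage_one_prompt(template: str, raw_input: str, example_output: str) -> str:
    """Insert one proven input/output pair into the Stage One template.

    Hypothetical helper: it only builds the prompt text and does not call any LLM.
    """
    values = {RAW_SLOT: raw_input, OUTPUT_SLOT: example_output}
    missing = [slot for slot in values if slot not in template]
    if missing:
        raise ValueError(f"template is missing slot(s): {', '.join(missing)}")
    # Replace both slots in a single pass, so text inside the pasted input
    # is never re-scanned and mistaken for a slot.
    pattern = re.compile("|".join(re.escape(slot) for slot in values))
    return pattern.sub(lambda match: values[match.group(0)], template)


if __name__ == "__main__":
    # Abridged template for illustration; in practice, paste the full prompt.
    template = (
        "You are an expert Prompt Engineer.\n\n"
        "**INPUT DATA (raw):**\n\n{Paste raw input here}\n\n---\n\n"
        "**OUTPUT (desired result):**\n\n{Paste example output here}\n"
    )
    raw = "Notes from the client briefing ..."  # hypothetical input
    final = "FOR IMMEDIATE RELEASE ..."  # hypothetical approved output
    print(build_stage_one_prompt(template, raw, final))
```

Failing loudly when a slot is missing is a deliberate choice: a template that silently drops the example output would still produce a prompt, just a much weaker one.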

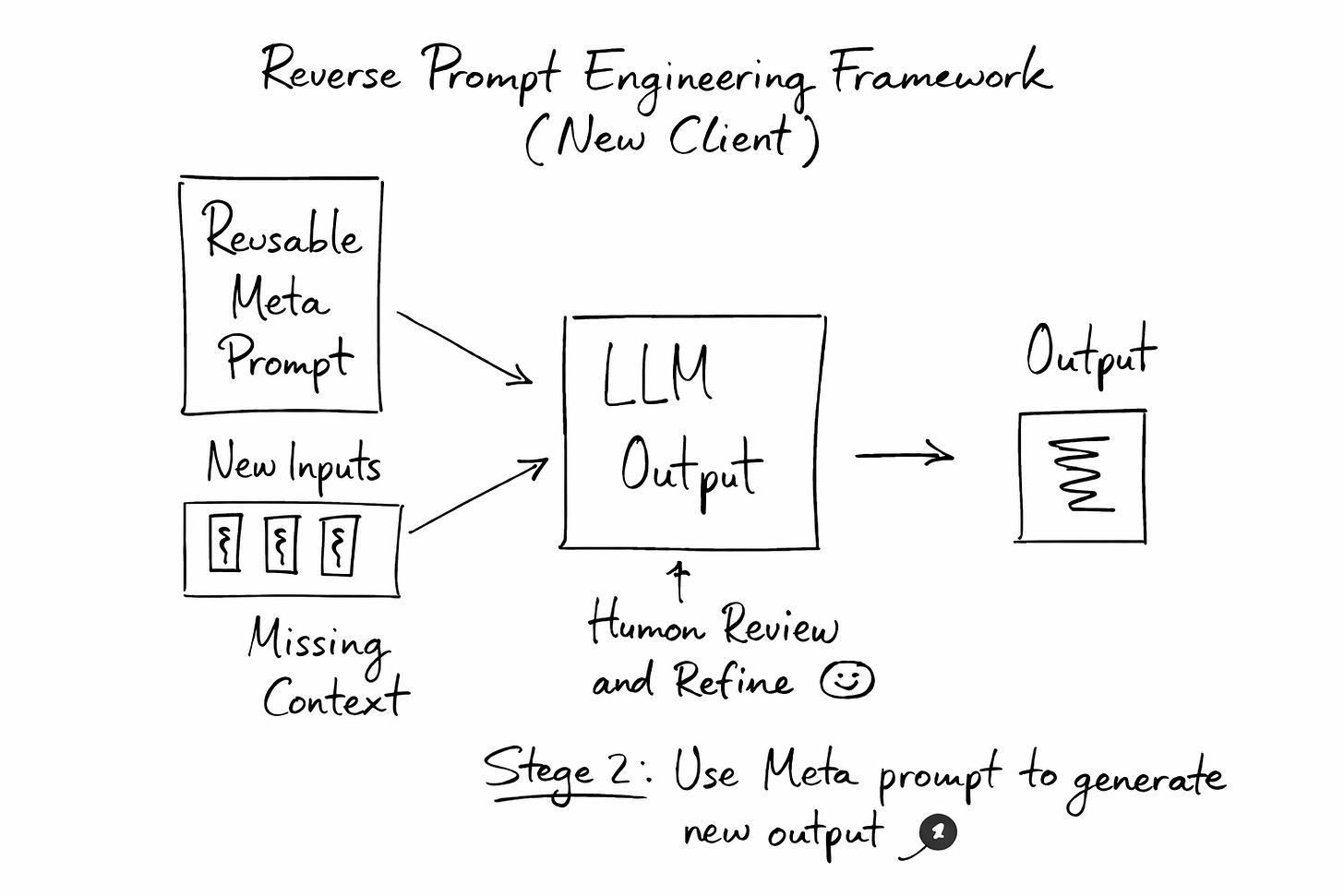

Stage Two: Reuse the Meta-Prompt to Generate Outputs

Stage Two is where the actual work gets done.

Instead of starting from a blank page, you start with the reusable meta-prompt and new inputs. The LLM may ask for clarification or additional context, which you provide. It then generates a first-pass output aligned with the original quality standard.

At this point, the human role changes. You are no longer drafting from scratch. You are reviewing, adjusting, and applying context.

This separation matters. LLMs excel at structure and repetition. Humans excel at judgment and nuance.
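In Stage Two, the meta-prompt returned by the LLM should include placeholders for new input, but their exact format is up to the model. The minimal Python sketch below assumes a hypothetical `{SLOT_NAME}` convention. It lists the slots a meta-prompt expects and refuses to fill it until every slot has a value, so gaps surface before the model has to ask about them. The function names are illustrative, not part of any library.

```python
import re

# Hypothetical convention: the meta-prompt marks each new input as {SLOT_NAME}.
PLACEHOLDER = re.compile(r"\{([^{}]+)\}")


def find_placeholders(meta_prompt: str) -> list[str]:
    """Return slot names in order of first appearance, without duplicates."""
    names: list[str] = []
    for name in PLACEHOLDER.findall(meta_prompt):
        if name not in names:
            names.append(name)
    return names


def fill_meta_prompt(meta_prompt: str, inputs: dict[str, str]) -> str:
    """Fill every slot with new input; refuse to run with gaps."""
    missing = [name for name in find_placeholders(meta_prompt) if name not in inputs]
    if missing:
        raise ValueError(f"provide values for: {', '.join(missing)}")
    # Single pass: values are inserted literally and not re-scanned.
    return PLACEHOLDER.sub(lambda match: inputs[match.group(1)], meta_prompt)


if __name__ == "__main__":
    meta_prompt = "Write a press release for {COMPANY} announcing {NEWS}."
    print(find_placeholders(meta_prompt))  # ['COMPANY', 'NEWS']
    print(fill_meta_prompt(meta_prompt, {"COMPANY": "Example Ltd", "NEWS": "a new product"}))
```

If your meta-prompt contains literal braces, for example a JSON output format, this simple pattern would mistake them for slots; asking the LLM to use a more distinctive marker such as `[[SLOT_NAME]]` would avoid that.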

Real-World Example: Press Releases

This framework has been tested in live production work.

In one case, I worked with a PR agency team responsible for writing press releases. The team used real client inputs and the final press releases sent to media outlets. These were provided to an LLM to generate a reusable meta-prompt for press release creation. The result was a meta-prompt of around 1,000 words.

Once the meta-prompt was created, the same client inputs were reused. The LLM generated a new press release from those inputs, guided only by the meta-prompt.

The output was reviewed by the professionals who normally write these releases. They rated the result 7 out of 10.

That score is important. It was not perfect, but it was good enough to act as a strong foundation. With human editing and contextual awareness, the team could efficiently turn it into a 10 out of 10 final release.

The value was not replacement. It was speed, consistency, and reduced cognitive load.

A Few Examples and Ideas

The same approach applies to many repeatable knowledge tasks.

Internal strategy summaries can use research notes and meeting transcripts as inputs, producing consistent executive-ready outputs.

Marketing or campaign briefs can follow the same structure every time, regardless of who prepares them.

Educational or explainer content can maintain tone, depth, and clarity across multiple pieces without constant prompt tweaking.

In each case, the principle remains the same. One strong example becomes the blueprint for many.

Final Thoughts: A Smarter Way to Work With LLMs

LLMs are becoming more capable, but the real shift is happening in how we use them.

Reverse Prompt Engineering shows that consistency does not require prompt engineering expertise. It requires strategic thinking and a willingness to work backwards from success.

This approach allows LLMs to handle the heavy lifting of structure and repetition, while humans stay focused on judgment, creativity, and context.

Try this framework on your own work. Notice where it saves time and where it still needs human input.

Most importantly, share what you learned.