How to Create a Movie with AI in 48 Hours

Discover how I created a short movie in just 48 hours using AI tools like Runway and Midjourney. Learn the process and my tips.

This newsletter is a bit different from my usual content. I'm excited to share my experience of taking part in the Runway Gen:48 short film challenge. Last weekend, I joined this exhilarating competition, run by Runway, which pushes the boundaries of AI-assisted filmmaking.

What is Runway?

For those unfamiliar, Runway is an innovative platform focused on generative media and artificial intelligence. It enables creators to produce high-quality video content with advanced AI tools. Its latest model, Gen-3 Alpha, improves video generation, allowing users to create expressive, photorealistic human characters, dynamic scenes, and intricate narratives.

The Challenge

The third edition of Runway's Gen:48 is a short film competition in which participants create a film using AI tools within a tight 48-hour timeframe. Here are the key rules:

Films must be 1-4 minutes long.

All generative video must be created using Runway Gen-3 Alpha.

Participants must own the rights to any live-action footage incorporated.

Music and sound design should be from the provided Epidemic Sound access or owned by the creator.

Content must not be offensive, graphic, or explicit.

The challenge was announced Saturday morning at 9 AM, so no one knew the specifics in advance. Each film had to contain one selection from each of these three categories:

Inciting Incident:

A natural disaster

A character's disappearance

Heartbreak

A physical transformation

A family reunion

A graduation

Archetype:

A lone parent

A long lost lover

A mischievous creator

A dark entity

An ethereal creature

An unbreakable leader

Location:

Wilderness

Limbo

Time-Shifted Realm

Tropical Paradise

Medieval Europe

Underwater
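Because each film had to use exactly one option from each of the three lists, the space of possible briefs is small enough to enumerate. The Python sketch below is only an illustration (it is not part of my workflow): it copies the three lists from the brief and counts every incident/archetype/location combination with `itertools.product`.

```python
from itertools import product

# The three Gen:48 category lists, copied from the challenge brief above.
INCITING_INCIDENTS = [
    "A natural disaster",
    "A character's disappearance",
    "Heartbreak",
    "A physical transformation",
    "A family reunion",
    "A graduation",
]
ARCHETYPES = [
    "A lone parent",
    "A long lost lover",
    "A mischievous creator",
    "A dark entity",
    "An ethereal creature",
    "An unbreakable leader",
]
LOCATIONS = [
    "Wilderness",
    "Limbo",
    "Time-Shifted Realm",
    "Tropical Paradise",
    "Medieval Europe",
    "Underwater",
]


def all_briefs():
    """Return every (incident, archetype, location) combination."""
    return list(product(INCITING_INCIDENTS, ARCHETYPES, LOCATIONS))


briefs = all_briefs()
print(len(briefs))  # 216, i.e. 6 * 6 * 6
print(briefs[0])
```

With six options per category there are 6 × 6 × 6 = 216 possible briefs, so two entrants picking the same combination is quite likely in a field of thousands of teams.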

Let's Get Started

So there I was on Saturday morning: no team, just me, my laptop, and my notebook. First, I set my goals:

Challenge myself

Update my skills in Runway and Midjourney

Create a process for automated (AI-assisted) video production

Craft a compelling story

Learn video editing

Have fun!

I also outlined my building blocks:

Story

Storyboard

Prompts for Midjourney

Prompts for Runway

Prompts for Sound

Full editing in DaVinci Resolve

As the clock started ticking, I made my category selections:

Story

This was arguably the most critical part of the challenge. You can use amazing technology to create stunning visuals, but it's the story that truly grabs attention. Because one of my main goals was to define a repeatable production process, I started by building a custom GPT configured with well-known storytelling frameworks. This took about 2 hours, including research and gathering reference material for the model. It then took another 2-4 hours until I was happy with the final version of the story and the narrator's script. The result was a mix of my own initial ideas, developed further with the help of my custom GPT.

Storyboard

I used the same strategy here: I primed the model with best practices for creating storyboards, plus templates and examples. Running my script through it produced 4 Actions and 24 Shots. One of my requirements was to keep every shot to 3 seconds or less, given that the whole movie should be about 2 minutes long. As a solo participant, I knew I wouldn't have time to edit a longer piece, so I needed to keep it simple.
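A quick way to sanity-check a storyboard like this is a runtime budget. The sketch below is illustrative only: the 24 shots and the 3-second cap come from the paragraph above, the 60-240 second window is the challenge's 1-4 minute rule, and the function names are hypothetical.

```python
# Hypothetical shot-budget check for a Gen:48-style storyboard.
MIN_FILM_SECONDS = 60   # rule: films must be at least 1 minute long
MAX_FILM_SECONDS = 240  # rule: films must be at most 4 minutes long


def max_runtime(num_shots: int, max_shot_seconds: float) -> float:
    """Upper bound on picture runtime if every shot uses its full length."""
    return num_shots * max_shot_seconds


def within_rules(runtime_seconds: float) -> bool:
    """True if a runtime falls inside the 1-4 minute window."""
    return MIN_FILM_SECONDS <= runtime_seconds <= MAX_FILM_SECONDS


runtime = max_runtime(24, 3)
print(runtime, within_rules(runtime))  # 72 True
```

Under these assumptions the shots alone cap out at 72 seconds, which clears the one-minute minimum; getting closer to a two-minute cut would mean letting some shots run longer or adding other material such as titles.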

Prompts for Midjourney and Runway

The next step was to convert the storyboard's 24 shots into images and videos. I set up another custom GPT with prompting best practices for Midjourney and Runway and instructed it to turn the input storyboard into prompts. While not perfect, it was a good starting point.
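To illustrate the idea of turning storyboard shots into prompts, here is a minimal template-based sketch in Python. It is not what my custom GPT actually produced: the `Shot` fields and both templates are assumptions. The only real tool syntax used is Midjourney's `--ar` aspect-ratio parameter; the Runway template simply puts the camera move first, as a motion prompt to pair with the upscaled still.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    # Hypothetical storyboard fields; the real storyboard template is not shown.
    action: int
    shot: int
    subject: str
    setting: str
    framing: str      # used for the still image
    camera_move: str  # used for the video clip
    mood: str


def midjourney_prompt(s: Shot, aspect_ratio: str = "16:9") -> str:
    """Still-image prompt; --ar sets Midjourney's aspect ratio."""
    return (f"{s.subject}, {s.setting}, {s.framing}, {s.mood} mood, "
            f"cinematic still --ar {aspect_ratio}")


def runway_prompt(s: Shot) -> str:
    """Motion prompt to accompany the still used as Runway's image input."""
    return f"{s.camera_move}: {s.subject} in {s.setting}, {s.mood} atmosphere"


shot = Shot(1, 3, "a lone father", "a flooded forest at dusk",
            "low-angle wide shot", "Slow dolly-in", "tense")
print(midjourney_prompt(shot))
print(runway_prompt(shot))
```

A fixed template like this keeps prompts consistent across 24 shots, while an LLM-based generator can vary wording more; in practice the output is a starting point to refine by hand either way.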

Voiceover and Sound Effects

At this stage, I decided to create the main narrator's voice, as it would be crucial when putting all the pieces together. I used ElevenLabs for this, found a male voice I liked, and generated the voiceover. To make it sound good, you need to play with punctuation to get the right intonation and then edit it manually at the next stage. Later, during the actual production stage, I generated all the sound effects I needed (heavy breathing, footsteps, a ticking clock, etc.) in ElevenLabs as well.

Generating Images and Videos + Putting It All Together

More than 24 hours had passed since the beginning, and while I had the foundation, I hadn't actually created a single image or video. So, I dove into the actual production.

Next, I created images from the prompts, picked the ones I was happy with for each shot, upscaled them in Midjourney, used them as source images in Runway, and generated videos. Once satisfied, I downloaded the videos and imported them into DaVinci Resolve. I used a strict naming convention to avoid getting lost, naming every action and shot so I could easily match each file to the storyboard.
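A naming convention is easiest to keep strict when names are generated and checked by code rather than typed by hand. The sketch below uses a hypothetical scheme, `A<action>_S<shot>_<kind>_v<take>.<ext>` (for example `A02_S07_vid_v03.mp4`); my actual scheme isn't reproduced here. Zero-padding the numbers makes a plain alphabetical sort, like the one in a file browser or media bin, follow storyboard order.

```python
import re

# Hypothetical convention: A<action>_S<shot>_<kind>_v<take>.<ext>
# kind: img (still), vid (video clip), vo (voiceover), sfx (sound effect)
PATTERN = re.compile(r"^A(\d{2})_S(\d{2})_(img|vid|vo|sfx)_v(\d{2})\.(\w+)$")


def make_name(action: int, shot: int, kind: str, take: int, ext: str) -> str:
    """Build a zero-padded file name so alphabetical order = storyboard order."""
    return f"A{action:02d}_S{shot:02d}_{kind}_v{take:02d}.{ext}"


def parse_name(name: str) -> dict:
    """Split a conforming file name back into its parts, or raise ValueError."""
    m = PATTERN.match(name)
    if m is None:
        raise ValueError(f"not in naming convention: {name}")
    action, shot, kind, take, ext = m.groups()
    return {"action": int(action), "shot": int(shot), "kind": kind,
            "take": int(take), "ext": ext}


files = [
    make_name(2, 7, "vid", 3, "mp4"),
    make_name(1, 1, "img", 1, "png"),
    make_name(1, 12, "vid", 2, "mp4"),
]
print(sorted(files))
print(parse_name("A02_S07_vid_v03.mp4"))
```

Running `parse_name` over a download folder is also a cheap way to catch a stray file that doesn't follow the convention before importing it into the editor.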

Along the way, I also found that I got better results when generating 10-second videos (they looked less static and had more action) than 5-second ones. So from that point on, I generated only 10-second videos, which slowed down the process.

Music

With everything together, I wondered: Do I need music or not? I had an idea of what music would fit best, and because I had time, I decided to try it out. I used

Final Bit and Result

So that was it – render and upload.

What I've Learned and Developed

An end-to-end process for video production

Storytelling Custom GPT

Storyboard Custom GPT

Prompt Generator for Midjourney and Runway

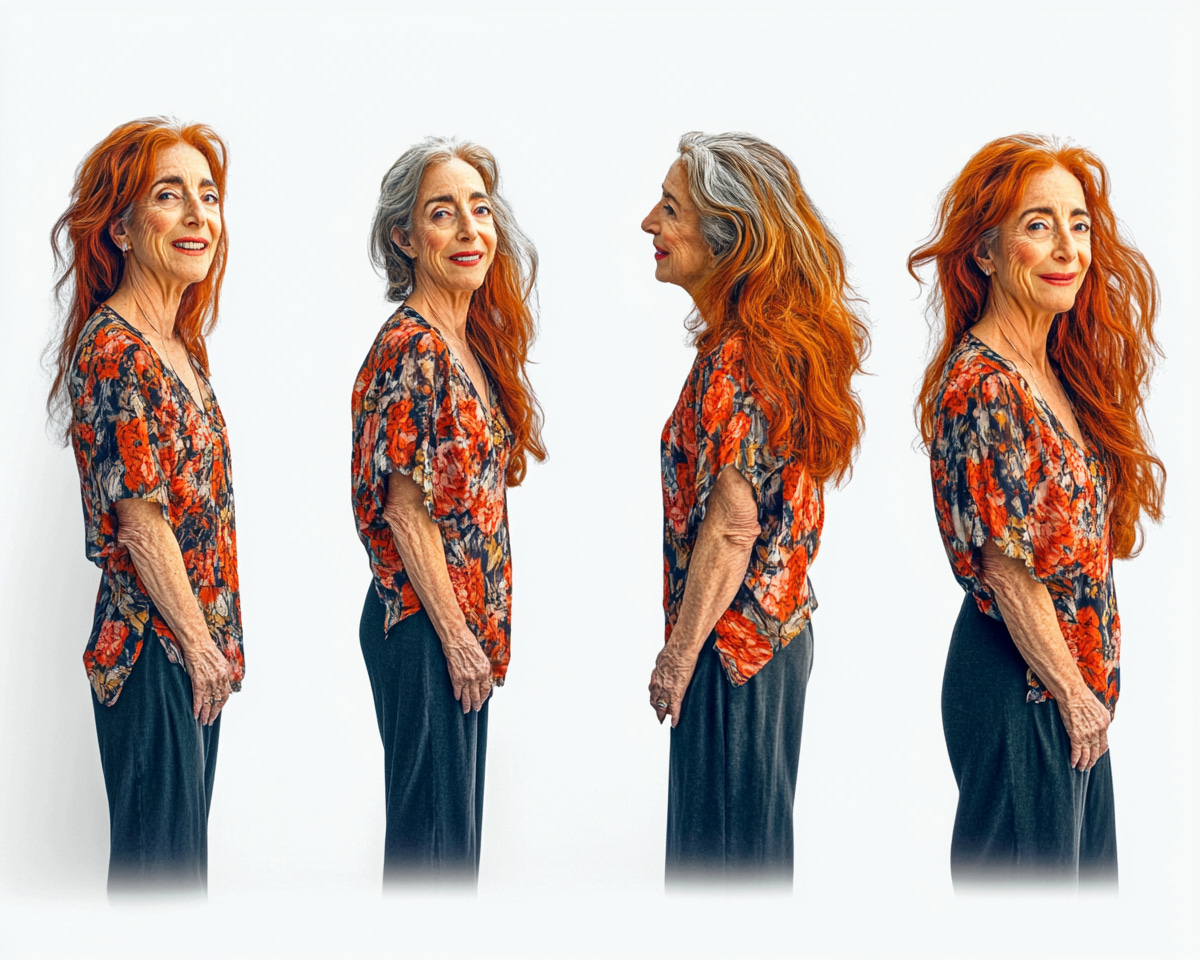

Creating consistent characters in Midjourney

Editing video in DaVinci Resolve

Final Thoughts

This challenge was an incredible journey into the future of filmmaking. With over 3,500 people competing across 3,000 teams, it's clear that AI-assisted movie creation is capturing the imagination of creators worldwide. You can see some of the entries in the playlist below:

https://youtube.com/playlist?list=PLb7JnVIVPr6W9IIVjlIrpaKwCO42R6m2o&si=NoESyqPU6f1xS12q

Looking ahead, I believe we're only scratching the surface of what's possible with AI in filmmaking. As tools like Runway and Midjourney continue to evolve, we'll likely see even more sophisticated integration of AI in various aspects of movie production. From script generation to real-time visual effects, the potential is enormous.

However, it's important to note that while AI can significantly enhance and streamline the filmmaking process, it doesn't replace human creativity. If anything, this challenge showed me that the human touch – in storytelling, in making creative decisions, and in bringing emotional depth to a project – is more crucial than ever.

As we move forward, I anticipate we'll see a new breed of filmmakers emerge – those who can seamlessly blend traditional filmmaking skills with AI expertise. This fusion could lead to entirely new forms of storytelling and visual experiences that we can barely imagine today.

If you have any questions, get in touch: https://bit.ly/3TAzMWy