How Multimodal AI Will Transform Human-Machine Interaction Forever

How multimodal AI is changing user experiences, accessibility, and contextual understanding across industries.

This week, two major tech companies made groundbreaking announcements in the field of artificial intelligence (AI). OpenAI introduced GPT-4o, a multimodal model capable of real-time reasoning across text, audio, and visual data. Its conversational voice interface answers with response times that OpenAI describes as similar to those of a human conversation.

Google unveiled Project Astra at the Google I/O event. Astra is Google's vision for a future AI assistant powered by its Gemini models, which also support multimodal inputs like audio, text, images, and video in real time. While still a prototype, Astra offers a glimpse into the next generation of human-machine communication.

The emergence of these advanced multimodal AI systems raises an intriguing question: how will our interactions with machines fundamentally change? And what implications will this have for efficiency, accessibility, and our overall relationship with technology?

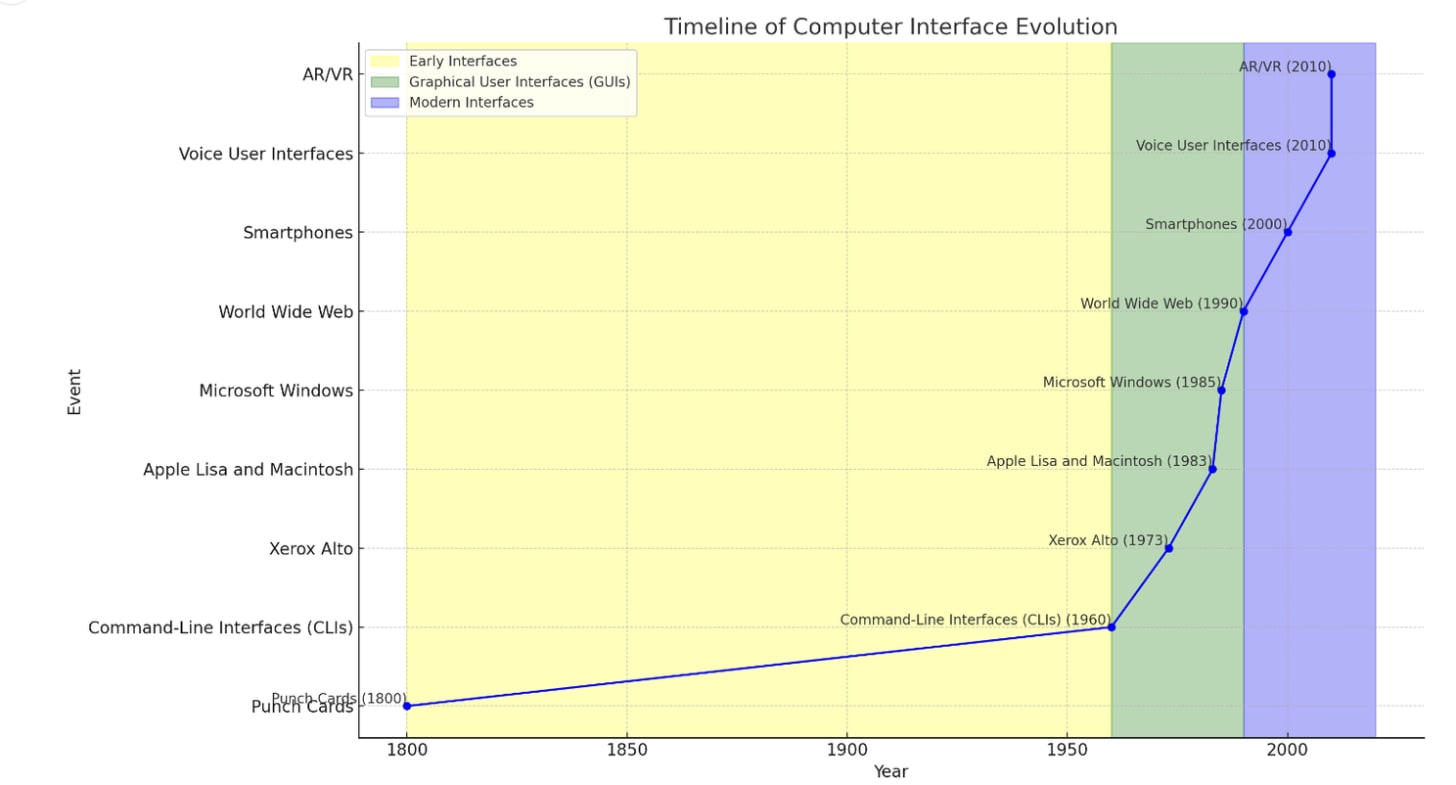

A Brief History of User Interfaces

Computer interfaces have evolved significantly over time, moving from punch cards and command-line interfaces to graphical user interfaces (GUIs) and, more recently, touch screens, voice assistants, and augmented/virtual reality.

Early Interfaces:

- Punch Cards (Early 1800s - 1970s): One of the earliest interfaces, punch cards contained holes representing data and were used to control automated machinery and input data into computers.

- Command-Line Interfaces (CLIs) (1960s - 1980s): Users interacted with computers by typing text commands, requiring memorization of specific commands and syntax. CLIs were powerful but less accessible for average users.

Graphical User Interfaces (GUIs):

- Xerox Alto (1973): Considered the first computer with a GUI, it introduced windows, menus, radio buttons, and checkboxes.

- Apple Lisa and Macintosh (1983-1984): Apple popularized the GUI with the Lisa and Macintosh, featuring icons, windows, and a mouse.

- Microsoft Windows (1985): Microsoft's Windows operating system brought the GUI to a wider audience, initially lacking overlapping windows but evolving with subsequent versions.

Modern Interfaces:

- World Wide Web (1990s): Web browsers became a new interface for accessing information.

- Smartphones (2000s): Touch interfaces with gestures like pinch, swipe, and tap became standard with the rise of smartphones like the iPhone.

- Voice User Interfaces (2010s): Voice assistants like Siri, Alexa, and Google Assistant enabled natural language interaction with technology.

- Augmented/Virtual Reality (AR/VR) (2010s): AR and VR interfaces provide immersive experiences by blending virtual and real worlds, with applications in gaming, education, healthcare, and more.

This graph was made entirely in ChatGPT using a series of prompts based on the information provided in the text above.

What's Next and What the Near Future Looks Like

Over the next few years, we are likely to see a boom in AI Assistants or AI Agents, with every person and organization likely to have one or several of these advanced AI companions. These AI Assistants represent the next evolution of the digital assistants we already know, like Apple's Siri or Amazon's Alexa, except they will be highly personalized (knowing a lot about each individual user), far more capable, highly creative, and emotionally intelligent, with distinct personalities.

How Multimodal AI Will Change Our Interaction with Machines

Enhancing User Experiences

One of the most tangible benefits of multimodal AI is an enhanced, more intuitive user experience that mirrors how humans communicate. Virtual assistants will comprehend spoken commands seamlessly interleaved with contextual visual information. Chatbots will interpret shared images and documents alongside text to provide more relevant and insightful responses.

Improving Accessibility

By allowing machines to perceive and interact through speech, gestures, facial expressions and other modalities, multimodal AI opens up new avenues of accessibility. Individuals with diverse abilities and needs, including those with disabilities or neurological conditions, can communicate with technology in ways that work best for them.

Contextual Understanding

Multimodal AI models analyze and correlate information from multiple sources, such as speech, text, imagery, and video, to build rich contextual models. This 360-degree perspective allows for more nuanced and accurate interpretations than unimodal AI, which is limited to a single data stream.
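To make the idea of correlating several sources concrete, here is a minimal sketch of "late fusion", one simple way to combine signals from different modalities. It is an illustration only and not how GPT-4o or Gemini work internally; the function name `fuse_modalities`, the labels, the scores, and the weights are all hypothetical.

```python
from typing import Dict


def fuse_modalities(
    scores: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-modality label scores with a weighted average (late fusion)."""
    total_weight = sum(weights[modality] for modality in scores)
    fused: Dict[str, float] = {}
    for modality, label_scores in scores.items():
        for label, score in label_scores.items():
            contribution = weights[modality] * score / total_weight
            fused[label] = fused.get(label, 0.0) + contribution
    return fused


# Hypothetical outputs of three separate single-modality classifiers.
scores = {
    "speech": {"frustrated": 0.7, "neutral": 0.3},
    "text": {"frustrated": 0.2, "neutral": 0.8},
    "video": {"frustrated": 0.9, "neutral": 0.1},
}
# Assumed trust in each modality; video counts double in this example.
weights = {"speech": 1.0, "text": 1.0, "video": 2.0}

fused = fuse_modalities(scores, weights)
best = max(fused, key=fused.get)
print(best, fused)
```

In this made-up example, the text alone reads as neutral, but the tone of voice and the facial expression point the other way, so the fused result leans toward "frustrated". That is the kind of nuance a single data stream would miss.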

Versatile, Cross-Platform Experiences

One of the inherent strengths of multimodal AI is its ability to process and generate content across different modalities seamlessly. This versatility enables a range of powerful cross-platform applications not possible with unimodal AI approaches.

7 Pioneering Future Applications I Would Like to See

While multimodal AI is still in its nascent stages, the possibilities for transformative applications across sectors are immense:

Advanced Health Monitoring: AI that analyzes multiple data points like voice, facial expressions, and movement to predict and diagnose health issues early, improving outcomes through proactive healthcare. These systems could detect subtle cues in speech, facial micro-expressions and motions that may indicate the onset of conditions like depression, Parkinson's and more.

AI Tutor Assistant: Tools that adapt to a student's learning style in real time by analyzing verbal questions, written work, and non-verbal cues, providing personalized feedback and support and tailoring learning materials dynamically. By identifying points of confusion and knowledge gaps through multimodal inputs, the AI tutor can adjust its teaching approach accordingly.

Emergency Response: AI that integrates visual data from traffic/security cameras, audio of 911 calls, responders' verbal logs and more to rapidly assess emergency situations and direct resources and personnel most effectively. This unified multimodal awareness can potentially save precious time and lives.

Entertainment Assistant: AI tools that adapt media experiences in real-time based on the viewer's emotional responses and engagement levels detected through facial expressions, body language, biomarker tracking and more.

Workplace Collaboration Agent: AI that understands and mediates team dynamics by analyzing speech patterns, facial expressions, body language and other modalities to optimize communication, group interactions and productivity. The agent could provide real-time feedback to teams on improving collaboration and working relationships.

AI Meeting Facilitator: AI that not only transcribes meetings but also analyzes participants' expressions, tone and engagement through audiovisual signals to better guide discussions and ensure productive outcomes. The facilitator could interject when energy levels dip or when the dialogue needs refocusing.

Dynamic Task Management: AI that comprehends verbal updates, emails, chats and even physical presence data to dynamically prioritize tasks and schedules based on the user's workload, stress levels, and time constraints. By understanding the full context, the AI could ensure optimal personal productivity.
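As a rough illustration of the last idea, the sketch below reorders a to-do list based on deadlines, effort, and a stress signal. Everything in it is an assumption made for the example: the `Task` fields, the `stress_level` value (imagined here as a number between 0 and 1 that a multimodal system might estimate), and the scoring formula itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    name: str
    hours_until_due: float
    effort_hours: float
    importance: int  # 1 (low) to 5 (high)


def priority(task: Task, stress_level: float) -> float:
    """Score a task; higher means do it sooner. stress_level is in [0, 1]."""
    urgency = task.importance / max(task.hours_until_due, 1.0)
    # Under high stress, long tasks are penalized so quick wins surface first.
    effort_penalty = 1.0 + stress_level * task.effort_hours
    return urgency / effort_penalty


def prioritize(tasks: List[Task], stress_level: float) -> List[Task]:
    """Return tasks sorted from highest to lowest priority."""
    return sorted(tasks, key=lambda t: priority(t, stress_level), reverse=True)


tasks = [
    Task("Write quarterly report", hours_until_due=8, effort_hours=4, importance=5),
    Task("Reply to client email", hours_until_due=8, effort_hours=0.5, importance=3),
    Task("Plan next sprint", hours_until_due=48, effort_hours=2, importance=4),
]

calm_order = [t.name for t in prioritize(tasks, stress_level=0.0)]
stressed_order = [t.name for t in prioritize(tasks, stress_level=1.0)]
print(calm_order)
print(stressed_order)
```

With a calm user, the report comes first because it is the most important item due soon. With a stressed user, the short email reply moves to the top. A real assistant would need far richer signals and user control over how such trade-offs are made.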

Final Words

The rise of multimodal AI heralds a new era in human-machine interaction – one where our engagement with technology becomes increasingly natural, accessible and contextualized. By seamlessly integrating multiple communication channels like speech, text, visuals and more, this advanced AI will redefine how we operate, learn, work and thrive.