AEO, GEO and LLMO: 22 FAQs for AI-Driven Discovery

Master AEO, GEO & LLMO to boost brand visibility in AI-driven search. Learn strategies for citations, snippets & authority.

TL;DR — What This Guide Covers

This FAQ explains the fundamentals of AEO, GEO, and LLMO: what they are, why they matter, and how to optimize your content for AI-powered discovery. You’ll learn:

The core differences between AEO, GEO, and LLMO, including how they target featured snippets, AI-generated responses, and model-level understanding.

How to structure content so AI can extract and cite it as the definitive answer.

The exact formats that work best for answer engines and LLMs, like FAQs, how-tos, comparison tables, and list structures.

Key tactics for accuracy, personalization, and conversational queries.

Why schema markup matters — including the most important structured data types.

How to measure success using new metrics like citation frequency, authority, and share of voice in AI responses.

Team skills required to execute AI search optimization effectively.

In short: this guide teaches you how to be selected, cited, and trusted by AI, not just ranked by search.

Fundamentals & Definitions

What is Answer Engine Optimization (AEO) and how does it differ from traditional SEO?

Answer Engine Optimization (AEO) structures content so AI-powered answer engines can directly extract and present it as definitive responses rather than just listing links. While traditional SEO focuses on ranking web pages to drive clicks through keywords, backlinks, and click-through rates, AEO prioritizes becoming the single answer in zero-click environments like featured snippets, voice assistants, and AI Overviews.

Key differences:

SEO = optimize for rankings and clicks

AEO = optimize for direct answer extraction and zero-click satisfaction

AEO requires immediate, concise answers (first 2-3 sentences), clear hierarchical headings, FAQ/HowTo schema markup, and conversational language that voice assistants can read naturally

What is Generative Engine Optimization (GEO) and why is it important?

Generative Engine Optimization (GEO) is the strategic process of adapting digital content to improve visibility within AI-generated responses from platforms like ChatGPT, Gemini, and Perplexity. GEO ensures your brand is cited, mentioned, and recommended when AI systems synthesize information from multiple sources to answer complex queries.

Importance: As AI-driven search becomes the primary discovery method, GEO is critical for maintaining brand authority and capturing user attention in an environment where traditional rankings matter less than being referenced by trusted AI systems. Success is measured by citation frequency, attribution accuracy, and share of voice in AI responses rather than search result positions.

What is Large Language Model Optimization (LLMO) and why is it becoming essential?

Large Language Model Optimization (LLMO) tailors content, brand presence, and technical infrastructure to appear in AI-generated responses from large language models. LLMO focuses on making content retrievable, quotable, and referenceable, using semantic clarity, entity recognition, and structured data so that AI systems recognize your brand as an authoritative source.

Essential because: With zero-click searches now accounting for the majority of US queries, LLMO is critical for future-proofing visibility as users increasingly rely on conversational AI rather than traditional search results. LLMO helps ensure your content is represented accurately in AI-generated responses and helps you maintain your competitive position as AI becomes the primary interface for information retrieval.

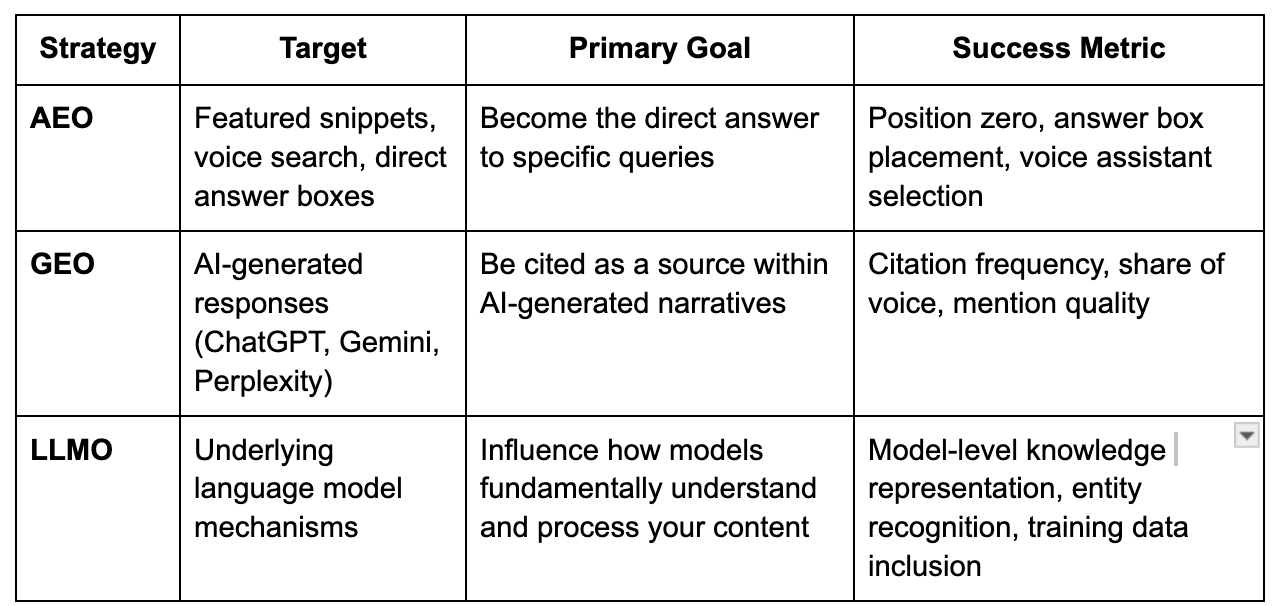

What are the core differences between AEO, GEO, and LLMO?

AEO — Targets featured snippets, voice search, and direct answer boxes to become the direct answer to specific queries, measured by position zero, answer box placement, and voice assistant selection.

GEO — Targets AI-generated responses (ChatGPT, Gemini, Perplexity) to be cited as a source within AI-generated narratives, measured by citation frequency, share of voice, and mention quality.

LLMO — Targets underlying language model mechanisms to influence how models fundamentally understand and process your content, measured by model-level knowledge representation, entity recognition, and training data inclusion.

Scope progression: AEO targets answer extraction → GEO targets citation in generated responses → LLMO targets model-level influence through semantic relationships and vector embeddings.

How does AEO specifically target zero-click and voice search results?

AEO targets zero-click searches by providing immediate, concise answers within the first 2-3 sentences of content, using question-based headers and structured data that AI engines can extract without requiring users to click through.

Voice search optimization:

Use conversational language patterns and natural speech queries

Implement FAQ schema and “How-To” guides with step-by-step instructions

Create long-tail question phrases that voice assistants like Siri and Alexa can easily read aloud

Ensure mobile responsiveness and fast-loading pages

Use Speakable schema for news content
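
As a rough illustration of the Speakable item above, the sketch below builds a JSON-LD block that marks which parts of a page are suited to text-to-speech. The helper name `build_speakable_jsonld`, the example URL, and the CSS class names are hypothetical placeholders; only the schema.org vocabulary (`speakable`, `SpeakableSpecification`, `cssSelector`) is standard.

```python
import json


def build_speakable_jsonld(page_name: str, url: str, css_selectors: list[str]) -> str:
    """Return a JSON-LD string flagging page sections suited to text-to-speech."""
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "name": page_name,
        "url": url,
        "speakable": {
            "@type": "SpeakableSpecification",
            # Selectors pointing at the headline and the short answer paragraph.
            "cssSelector": css_selectors,
        },
    }
    return json.dumps(data, indent=2)


speakable_markup = build_speakable_jsonld(
    "What is AEO?",
    "https://example.com/what-is-aeo",  # placeholder URL
    [".headline", ".answer-summary"],   # hypothetical class names
)
print(speakable_markup)
```

The selectors should point at short, self-contained passages, since a voice assistant reads them aloud without the surrounding page.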

How do “citations” and “mentions” replace “rankings” as the success metric in GEO?

In generative AI environments, traditional page rankings matter far less because AI models synthesize information from multiple sources and present cohesive responses rather than lists of links. Success is now measured by:

Citation frequency: How often your brand/content appears in AI-generated answers

Attribution accuracy: Whether AI properly credits your brand

Share of voice: Your brand’s presence versus competitors in AI responses

Positioning: Being the first cited source represents the new “Position 1”

These metrics measure brand authority and visibility within AI answers, reflecting the shift from traffic-driven to awareness-driven optimization.
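
The metrics above can be approximated from a sample of AI answers collected by hand or through a monitoring tool. The sketch below is one possible way to do that, assuming each answer has already been reduced to the ordered list of brands it cites. The function name `geo_metrics`, the metric keys, and the brand names are all made up for illustration. Attribution accuracy is left out because it usually needs manual review.

```python
from collections import Counter


def geo_metrics(answers: list[list[str]], brand: str) -> dict[str, float]:
    """Compute simple GEO metrics from sampled AI answers.

    Each answer is the ordered list of brands it cites (first = cited first).
    """
    total_answers = len(answers)
    all_citations = Counter(b for cited in answers for b in cited)
    total_citations = sum(all_citations.values())

    answers_citing = sum(1 for cited in answers if brand in cited)
    first_cited = sum(1 for cited in answers if cited and cited[0] == brand)

    return {
        # Share of sampled answers that cite the brand at all.
        "citation_frequency": answers_citing / total_answers if total_answers else 0.0,
        # Brand citations as a share of all citations in the sample.
        "share_of_voice": all_citations[brand] / total_citations if total_citations else 0.0,
        # Share of answers where the brand is the first cited source.
        "first_position_rate": first_cited / total_answers if total_answers else 0.0,
    }


# Hypothetical sample: four AI answers and the brands each one cited, in order.
sample = [
    ["Acme", "Globex"],
    ["Globex"],
    ["Acme"],
    ["Initech", "Acme"],
]
metrics = geo_metrics(sample, "Acme")
print(metrics)
```

Running the same prompts on a fixed schedule and comparing these numbers over time gives a trend line, which is more useful than any single snapshot.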

Content Strategy & Optimization

How do I structure my content to appear in AI-generated answers?

Structure content with immediate direct answers in the first 2-3 sentences, followed by comprehensive supporting details. Use clear hierarchical headings (H1 → H2 → H3) that mirror natural language queries.

Implementation:

Place direct answers near the top using concise paragraphs of 40-60 words

Use the inverted pyramid style where key information comes first

Implement question-and-answer formats, bulleted lists for easy extraction, summary boxes, and table formats for comparative data

Include clear topic sentences, use semantic HTML markup, and ensure each section can stand alone as a complete answer

Pro tip: Content with hierarchical headings gets cited nearly 3× more often than unstructured content.
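
Two of the checks above are easy to automate: whether the opening answer falls in the 40-60 word range, and whether headings step down one level at a time. The following is a minimal sketch, assuming the heading levels have already been extracted from the page as integers. Both function names are hypothetical.

```python
def check_answer_length(answer: str, min_words: int = 40, max_words: int = 60) -> bool:
    """True if the opening answer falls inside the target word range."""
    return min_words <= len(answer.split()) <= max_words


def find_heading_skips(levels: list[int]) -> list[tuple[int, int]]:
    """Return (previous, current) pairs where a heading jumps down more than one level."""
    return [
        (prev, cur)
        for prev, cur in zip(levels, levels[1:])
        if cur > prev + 1
    ]


heading_levels = [1, 2, 3, 2, 4]  # e.g. H1, H2, H3, H2, H4
skips = find_heading_skips(heading_levels)
print(skips)  # the H2 -> H4 jump is reported as (2, 4)

opening = " ".join(["word"] * 45)  # stand-in for a 45-word answer paragraph
print(check_answer_length(opening))
```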

What content formats are most effective for answer engines and generative AI?

Most effective formats:

FAQ pages and Q&A sections: Mirror how users ask questions directly

How-to guides with step-by-step instructions: AI can easily extract sequential information

Comparison tables: Excellent for summarizing differences or pros/cons

Listicles and bullet-point lists: 80% of articles cited by ChatGPT contain lists vs only ~28% of top Google results.

Definition paragraphs: Concise definitions (e.g., “X is defined as...”) can be quoted directly for “What is X?” queries

Long-form comprehensive guides: Demonstrate topical authority

Multi-modal content: Videos with transcripts, infographics with descriptive alt text, audio with transcripts

What specific writing styles or patterns do LLMs best understand?

LLMs best understand conversational, natural language that uses complete sentences and mirrors how people actually ask questions.

Best practices:

Use question starters like “Who,” “What,” “Where,” “When,” “Why,” and “How”

Keep paragraphs short and factual (2-3 sentences) that state facts clearly and early

Use simple, direct language; avoid jargon without explanation

Include clear entity definitions and consistent terminology

Use active voice and explicit logical connections with transition words

Format key terms with bold or italics to signal importance

Cite authoritative sources within your text to build trust

Use distinctive, specific wording (high “perplexity”) while keeping sentence structure and terminology consistent (low “burstiness”) for machine comprehension

How can brands optimize content for conversational queries in AI chat agents?

Create content that anticipates follow-up questions and provides comprehensive, contextually rich answers that address user intent beyond the initial query.

Optimization tactics:

Conduct thorough question research using People Also Ask, Reddit threads, Quora, and forums

Incorporate natural-language questions verbatim into your content as subheadings

Adopt a conversational tone using active voice and second person (“you”)

Include practical examples that demonstrate application of concepts

Structure content to flow logically through related topics

Address long-tail, multi-part questions that include specific details

Build topical authority through interconnected content clusters demonstrating expertise across related subjects

Should I optimize existing content or create new content for AEO/GEO/LLMO?

Do both. Audit and optimize high-performing existing content first to maximize immediate gains, then create new content to fill gaps in topic coverage and target emerging queries.

Optimization approach:

Existing content: Add concise answer sections, FAQ blocks, schema markup, entity clarification, and updated information

New content: Address conversational queries not currently covered, demonstrate unique expertise, and build topical authority

Prioritize cornerstone content and high-traffic pages for optimization while simultaneously developing new content for visibility gaps

How do personalization and user intent affect AI-driven visibility?

AI models increasingly tailor responses based on user context, conversation history, and inferred intent, making it essential to create content that serves multiple intent types (informational, navigational, transactional).

Impact on strategy:

Cover multiple intent angles in your content (e.g., “for beginners... for advanced users...”)

Use phrasing that aligns with intent categories (“how to” for informational, “best X” for commercial investigation)

Ensure content is comprehensive enough to match various user contexts while maintaining clear topical focus

Build breadth of content (basic to advanced topics) so AI can select the piece that best fits the user’s level

Align content precisely with user intent to increase selection likelihood
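
To audit which intents your existing headings and target queries cover, a simple phrase-based tagger can serve as a first pass. This is only a sketch. Apart from “how to” and “best”, which come from the list above, the cue phrases are assumptions, and the names `INTENT_CUES` and `guess_intent` are hypothetical. Real intent classification is fuzzier than substring matching.

```python
# Cue phrases per intent; checked in this order, first match wins.
INTENT_CUES = {
    "informational": ("how to", "what is", "why "),
    "commercial investigation": ("best ", " vs ", "review"),
    "transactional": ("buy ", "price", "discount"),
}


def guess_intent(query: str) -> str:
    """Return the first intent whose cue phrase appears in the query."""
    padded = f" {query.lower()} "  # padding lets cues like " vs " match at the edges
    for intent, cues in INTENT_CUES.items():
        if any(cue in padded for cue in cues):
            return intent
    return "unclassified"


for q in ["How to add FAQ schema", "best CRM for startups", "acme login"]:
    print(q, "->", guess_intent(q))
```

Queries that come back as "unclassified" are worth reviewing by hand, since they often reveal navigational or mixed intent that the cue list misses.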

How do I ensure content accuracy when LLMs summarize or paraphrase it?

Write with extreme clarity using unambiguous language, clearly delineated facts, and explicit statements that leave no room for misinterpretation.

Accuracy safeguards:

Be explicit and clear; avoid ambiguous metaphors or complex jargon

Provide context for statements to make them self-contained

Include clear disclaimers and date stamps for time-sensitive information

Use consistent terminology and proper attribution for claims

Structure key information to resist distortion through paraphrasing

Regularly update content to ensure accuracy and use fact-check markup where appropriate

Limit complex, compounded sentences; break up long sentences with multiple clauses
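
The last safeguard lends itself to a quick automated pass. The sketch below flags sentences above a word limit so an editor can split them. The 25-word threshold and the function name `flag_long_sentences` are assumptions, and the sentence splitter is deliberately naive (it breaks on whitespace after `.`, `!`, or `?`, so abbreviations can produce false splits).

```python
import re


def flag_long_sentences(text: str, max_words: int = 25) -> list[str]:
    """Return the sentences in text that exceed max_words words."""
    # Naive split: break on whitespace that follows ., ! or ?
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]


draft = "Keep it short. " + " ".join(["word"] * 30) + "."
flagged = flag_long_sentences(draft)
print(len(flagged), "sentence(s) to review")
```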

What skills or team roles are required to execute LLMO effectively?

Successful LLMO requires cross-functional collaboration between specialized roles:

Core team composition:

Content strategists who understand AI behavior and semantic relationships

Technical SEO specialists familiar with structured data, schema implementation, and entity markup

Subject matter experts who create authoritative, information-rich content

Data analysts who track AI citations, mentions, and performance metrics

Developers who implement technical optimizations and ensure crawlability

Brand strategists who monitor share of voice and ensure consistent authority-building

Key requirement: Seamless collaboration between content, SEO, engineering, and product teams for comprehensive implementation.

Technical Implementation

Which answer engines and AI platforms should brands prioritize?

Prioritize the “big four” platforms that command the largest user bases:

Google AI Overviews (formerly Search Generative Experience, SGE) - appears directly in Google Search results

ChatGPT - dominant conversational AI with search capabilities

Microsoft Copilot - integrated into Office ecosystem

Perplexity AI - growing research-focused platform

Selection criteria: Choose based on your target audience’s usage patterns. Monitor other assistants such as Claude and Gemini, but focus resources on the platforms that currently drive the majority of AI-driven discovery for your audience. Industry-specific platforms may also warrant attention (e.g., AI coding assistants for technical brands).

What role does schema markup and structured data play in AEO/LLMO success?

Schema markup is critical for AEO/LLMO success as it provides explicit, machine-readable context that helps AI systems accurately understand and categorize your content’s meaning, relationships, and key information.

Impact:

Acts as a “definitive translator,” explicitly telling the AI “this text is a price” or “this person authored this article,” removing ambiguity

Can increase the likelihood of content being featured in rich results, answer boxes, and AI citations

Enables AI to quickly identify entities, facts, relationships, and content types without interpreting unstructured text

Reduces ambiguity and increases extraction accuracy compared to sites without structured data.
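
To make the “this person authored this article” case concrete, here is a minimal sketch that emits Article markup as JSON-LD wrapped in the script tag that pages typically use to embed it. The function name `build_article_jsonld` and all values (author, publisher, date) are placeholders. The property names (`headline`, `datePublished`, `author`, `publisher`) come from schema.org.

```python
import json


def build_article_jsonld(headline: str, author_name: str,
                         publisher_name: str, date_published: str) -> str:
    """Return an HTML <script> tag holding Article JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,
        # Explicitly states who wrote the article and who published it.
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


article_tag = build_article_jsonld(
    "AEO, GEO and LLMO: 22 FAQ for AI-Driven Discovery",
    "Jane Doe",       # placeholder author
    "Example Media",  # placeholder publisher
    "2025-01-15",     # placeholder ISO 8601 date
)
print(article_tag)
```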

What structured data types are critical for AI-driven search understanding?

Most critical schema types:

FAQPage and HowTo - ideal for direct answer extraction

Article with author and organization markup - establishes authority

Product, Review, Event - essential for commercial queries

Organization and Person - establishes entity relationships and authority

BreadcrumbList and SiteNavigationElement - helps AI understand site architecture

Speakable - specifically targets voice search

ClaimReview - for fact-checking content

Dataset - for structured information

sameAs property (used on Organization and Person, not a type itself) - links an entity to its official profiles elsewhere to establish identity across the web

Specialized schemas like MedicalCondition or Recipe - provide domain-specific clarity
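
As an illustration of two entries from this list, the sketch below generates FAQPage markup from question-and-answer pairs and Organization markup with `sameAs` links. The helper names, the example question, and the profile URLs are hypothetical. The structure (`mainEntity`, `Question`, `acceptedAnswer`, `Answer`, `sameAs`) follows the schema.org vocabulary.

```python
import json


def build_faq_jsonld(qa_pairs: list[tuple[str, str]]) -> dict:
    """Build FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


def build_organization_jsonld(name: str, url: str, profiles: list[str]) -> dict:
    """Build Organization markup whose sameAs links tie the brand to its other profiles."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": profiles,
    }


faq = build_faq_jsonld([
    ("What is Answer Engine Optimization (AEO)?",
     "AEO structures content so answer engines can extract it as a direct response."),
])
org = build_organization_jsonld(
    "Example Co",                                   # placeholder brand
    "https://example.com",                          # placeholder URL
    ["https://www.linkedin.com/company/example"],   # placeholder profile
)
print(json.dumps(faq, indent=2))
print(json.dumps(org, indent=2))
```

The answer text in the markup should match the visible on-page answer, so that what AI systems extract and what readers see stay consistent.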

Measurement, ROI & Competitive Strategy

How do AI Overviews impact organic search traffic?

AI Overviews significantly reduce click-through rates to websites by providing comprehensive answers directly in search results, potentially decreasing traditional organic traffic by 20-60% for informational queries. However, the impact varies:

Informational queries: Experience the steepest traffic declines

Transactional queries: Maintain better click-through rates as users seek to complete purchases

Brand benefit: Websites cited in AI Overviews can experience credibility boosts, increased branded searches, and higher-quality traffic from users seeking deeper information.

How can GEO influence website traffic and conversions?

GEO typically reduces direct website traffic from information-seeking queries since users receive answers without clicking through, but it significantly increases brand awareness, authority, and indirect traffic.

Conversion impact:

When AI models cite your brand, you gain credibility that leads to branded searches, social mentions, and higher conversion rates

Users who do click through arrive with greater intent and are further along in their decision journey

Focus shifts from traffic volume to traffic quality, with better-qualified leads

Brand mentions in AI answers build trust throughout the buyer’s journey, influencing purchase decisions even without immediate clicks.

Should GEO and SEO use separate content strategies?

No. Develop an integrated approach where content simultaneously serves traditional SEO and GEO objectives through comprehensive, authoritative coverage with flexible formatting.

Unified strategy benefits:

Core principles (quality, relevance, E-E-A-T, user value) apply to both

Prevents conflicting optimization efforts while maximizing visibility across all discovery channels

Tactical implementations may vary: schema markup, content structure, and optimization priorities can be adapted for each paradigm

Most effective approach optimizes content for both paradigms simultaneously.

How important is topical authority for LLMO visibility?

Topical authority is critically important for LLMO because AI models favor content from sources that demonstrate consistent expertise, comprehensive coverage, and credibility within specific subject areas.

Building authority:

Create extensive, interlinked content clusters on related subjects

Signal to AI models that your site is a definitive source worthy of citation

Depth and breadth of coverage on focused topics outperform scattered content across unrelated areas

Without clear topical authority, AI models may not recognize your brand as a trusted source, reducing citation likelihood even for individual high-quality pages.

How should companies align SEO, GEO, and LLMO into one strategy?

Create a unified content excellence framework where high-quality, authoritative content serves as the foundation for all three optimization types, with tactical variations in formatting and technical implementation.

Integration approach:

Develop integrated workflows where content teams simultaneously optimize for traditional search visibility, AI citation potential, and long-term model training influence

Establish shared KPIs around brand authority, content comprehensiveness, and multi-channel visibility rather than siloed metrics

Ensure technical teams implement solutions that serve all optimization objectives simultaneously (schema markup, entity clarity, site structure)

Track combined metrics including organic traffic, AI citations, brand mentions, and assisted conversions.